publications

2025

-

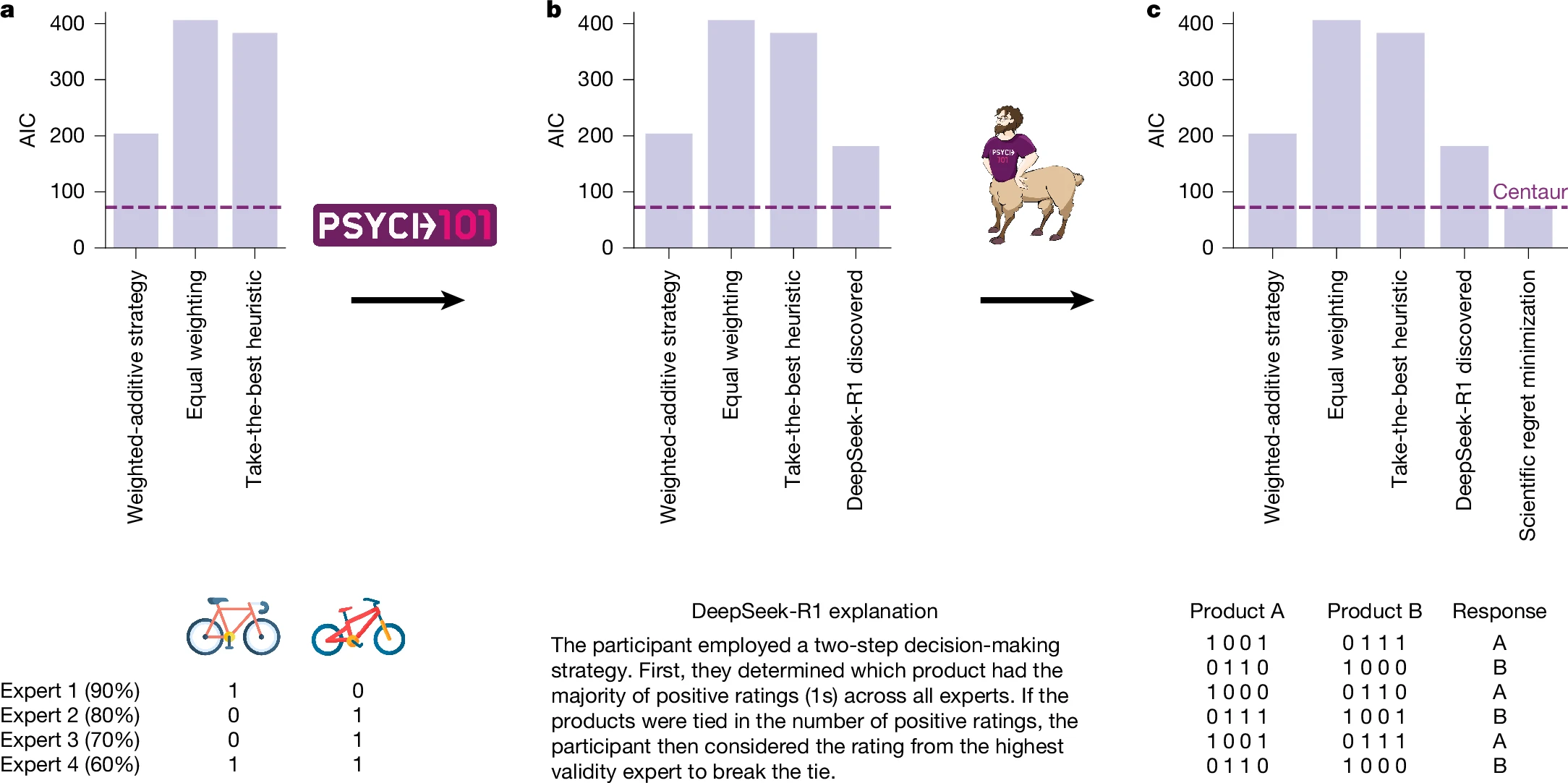

“Captured” by Centaur: Opaque predictions or process insights? Kieval, Phillip H, and Buckner, Cameron. Journal of Experimental Psychology: Animal Learning and Cognition, Sep 2025

Binz et al. (2025) describe several ways that Centaur, a new computational model that “captures” human behavior better than alternatives, can help develop a new unified theory of cognition. In this commentary, we evaluate several of these roles in light of recent achievements and empirical data, recommending increasingly explicit scrutiny of the various modeling roles that Centaur might play in developing new explanatory theories of human cognition.

2024

Artificial Achievements. Kieval, Phillip Hintikka. Analysis, Sep 2024

State-of-the-art machine learning systems now routinely exceed benchmarks once thought beyond the ken of artificial intelligence (AI). Oftentimes these systems accomplish tasks through novel, insightful processes that remain inscrutable to even their human designers. Taking AlphaGo’s 2016 victory over Lee Sedol as a case study, this paper argues that such accomplishments manifest the essential features of achievements as laid out in Bradford (2015). Achievements like these are directly attributable to AI systems themselves. They are artificial achievements. This opens the door to a challenge that calls out for further inquiry. Since Bradford grounds the intrinsic value of achievements in the exercise of distinctively human perfectionist capacities, the existence of artificial achievements raises the possibility that some achievements might be valueless.

2022

Permission to believe is not permission to believe at will. Kieval, Phillip Hintikka. Synthese, Aug 2022

According to doxastic involuntarism, we cannot believe at will. In this paper, I argue that permissivism, the view that, at times, there is more than one way to respond rationally to a given body of evidence, is consistent with doxastic involuntarism. Roeber (Mind 128(511):837–859, 2019a; Philos Phenom Res 1–17, 2019b) argues that, since permissive situations are possible, cognitively healthy agents can believe at will. However, Roeber (Philos Phenom Res 1–17, 2019b) fails to distinguish between two different arguments for voluntarism, both of which can be shown to fail by proper attention to different accounts of permissivism. Roeber considers a generic treatment of permissivism, but key premises in both arguments depend on different, more particular notions of permissivism. Attending to the distinction between single-agent and inter-subjective versions of permissivism reveals that the inference from permissivism to voluntarism is unwarranted.

-

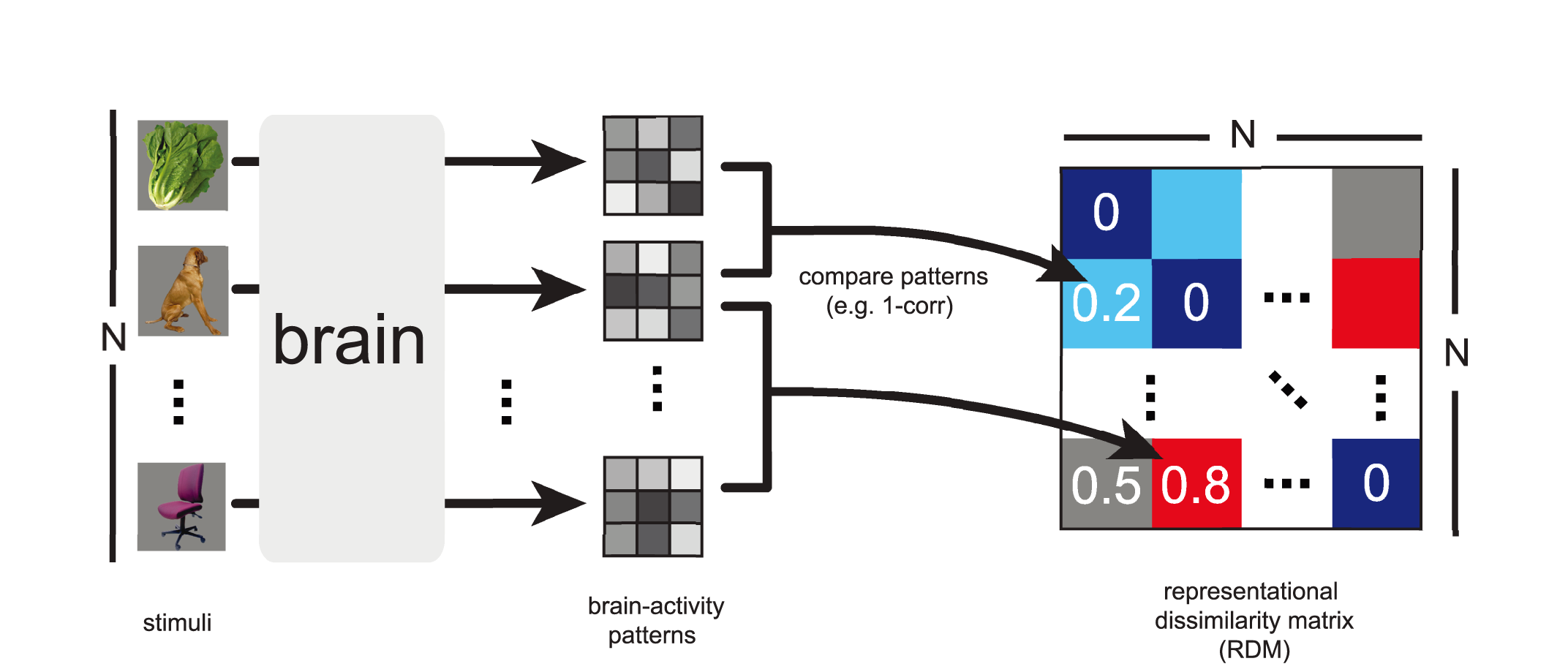

Mapping representational mechanisms with deep neural networks. Kieval, Phillip Hintikka. Synthese, May 2022

The predominance of machine-learning-based techniques in cognitive neuroscience raises a host of philosophical and methodological concerns. Given the messiness of neural activity, modellers must make choices about how to structure their raw data to make inferences about encoded representations. This leads to a set of standard methodological assumptions about when abstraction is appropriate in neuroscientific practice. Yet, when made uncritically, these choices threaten to bias conclusions about phenomena drawn from data. Contact between the practices of multivariate pattern analysis (MVPA) and philosophy of science can help to illuminate the conditions under which we can use artificial neural networks to better understand neural mechanisms. This paper considers a specific technique for MVPA called representational similarity analysis (RSA). I develop a theoretically informed account of RSA that draws on early connectionist research and work on idealization in the philosophy of science. By bringing a philosophical account of cognitive modelling into conversation with RSA, this paper clarifies the practices of neuroscientists and provides a generalizable framework for using artificial neural networks to study neural mechanisms in the brain.